Research

I’m interested in machine learning and deep learning in general. My current research focuses mainly on the practical side of ML, i.e., developing effective ML tools and pipelines for diverse real-world applications. I’m particularly interested in enhancing LLMs’ interaction with real-world applications by developing efficient, unified multi-modal models and building LLM agents capable of interacting with environments (e.g., browsers, command lines, IDEs) and users. I also study:

-

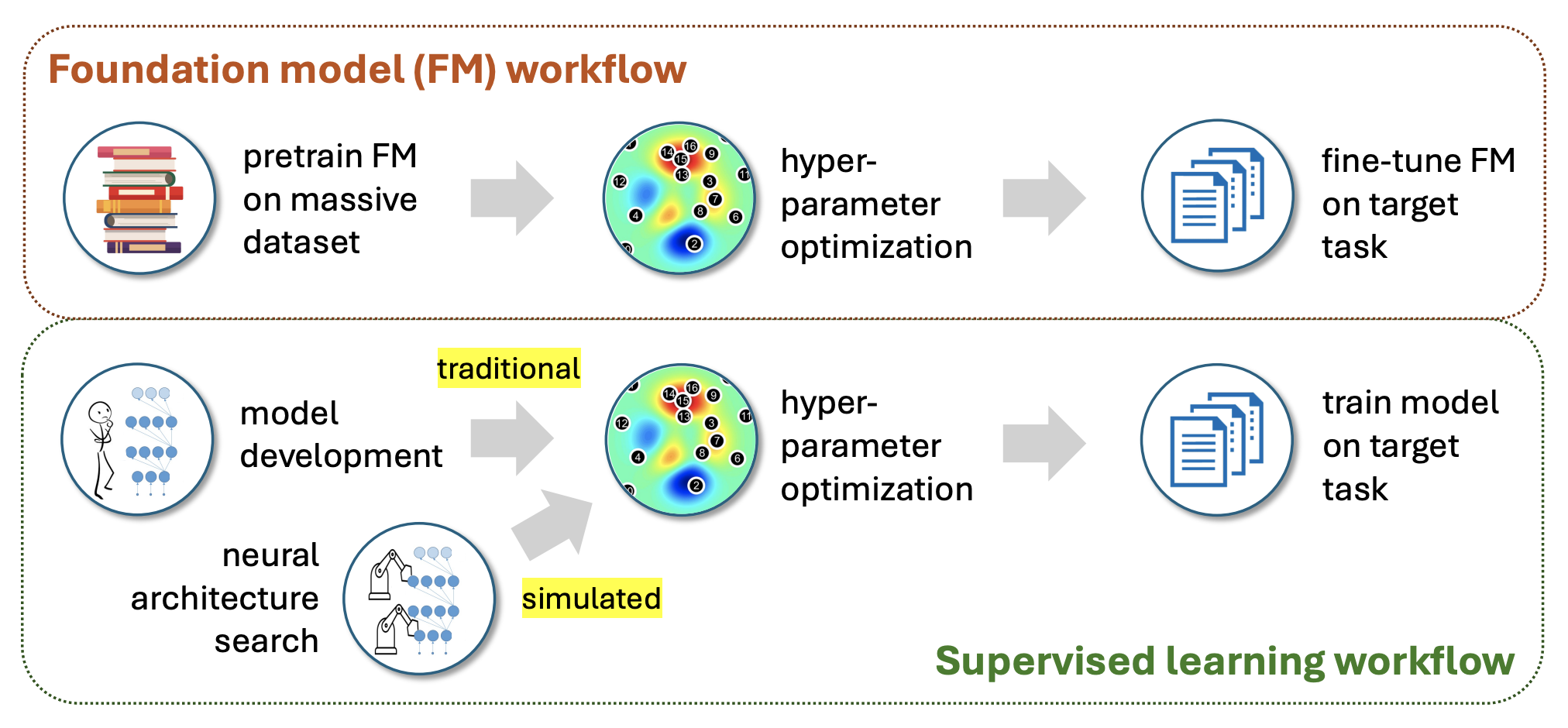

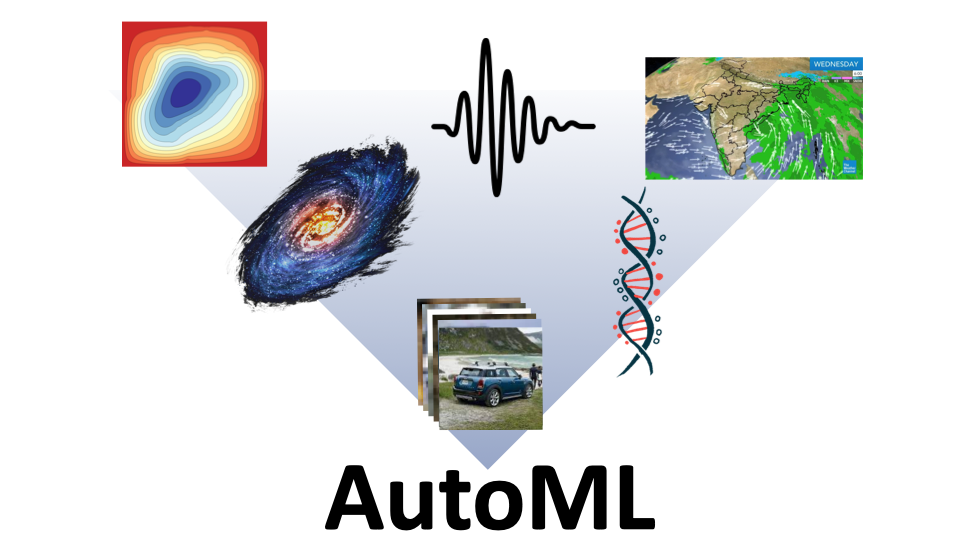

Automated machine learning (AutoML): how do we use neural architecture search (NAS) to generate effective and task-specific neural network architectures for different downstream problems?

-

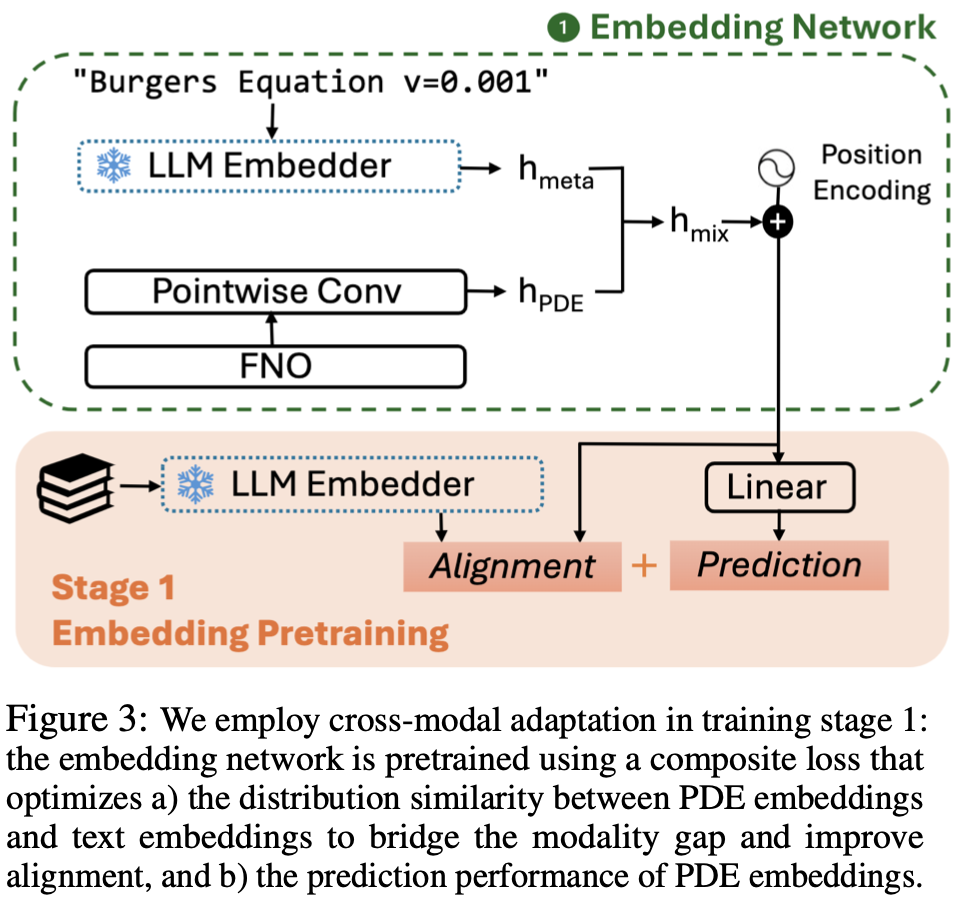

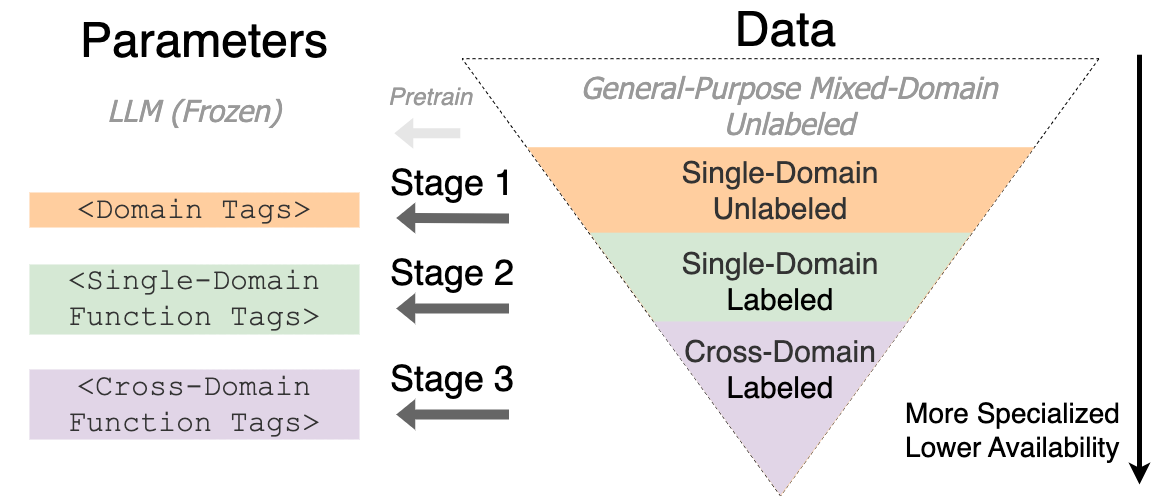

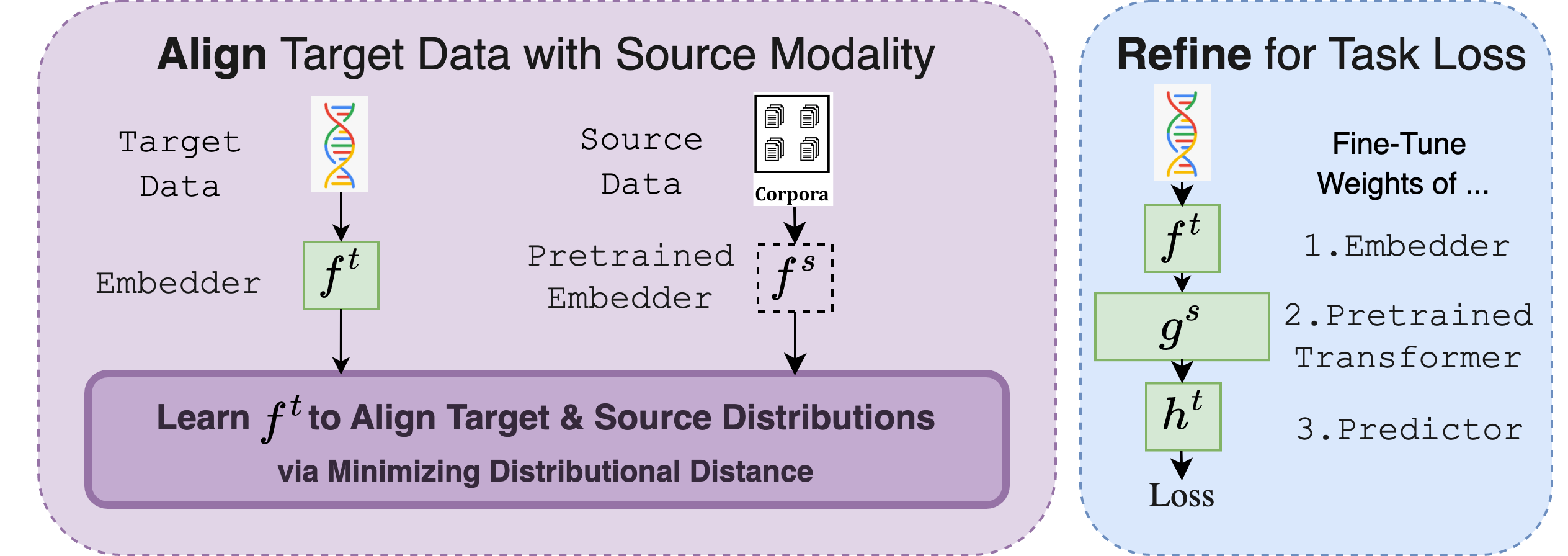

Tranfer learning to scientific domains: how can we leverage existing large-scale pretrained models effectvely for solving problems that are not within the model’s pretraining domain and modality?

Talks

- Cross-Modal Fine-Tuning, AI4Science Talks, March 20, 2023.

- DASH: How to Search Over Convolutions, The AutoML Podcast, December 19, 2022.

- Tackling Diverse Tasks with Neural Architecture Search, Deep Learning Machine Learning Journal Club, Mayo Clinic, October 17, 2022.

Publications

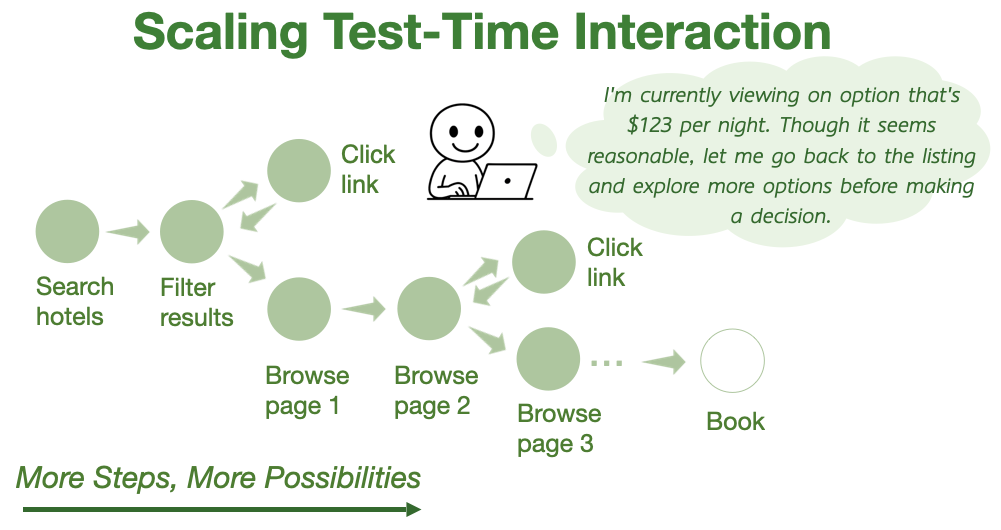

Thinking vs. Doing: Agents that Reason by Scaling Test-Time Interaction

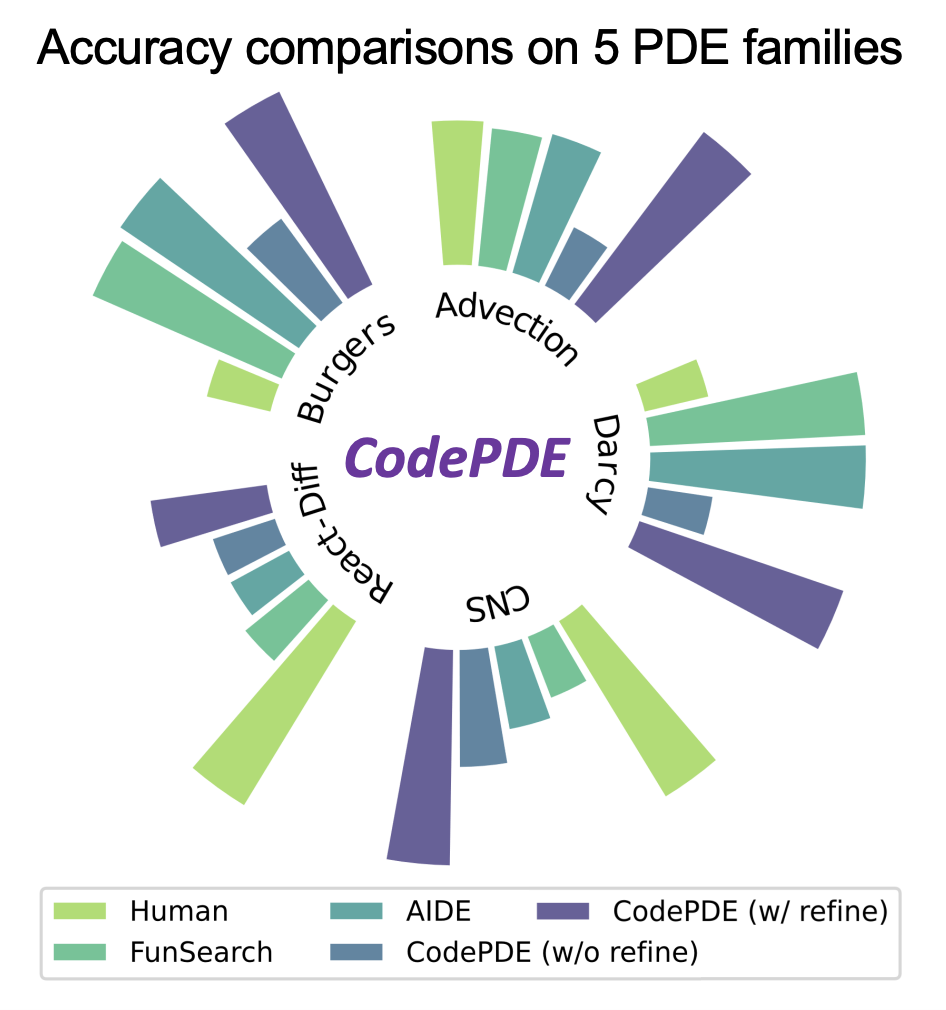

CodePDE: Benchmarking LLMs' Abilities to Solve PDEs through Code Generation

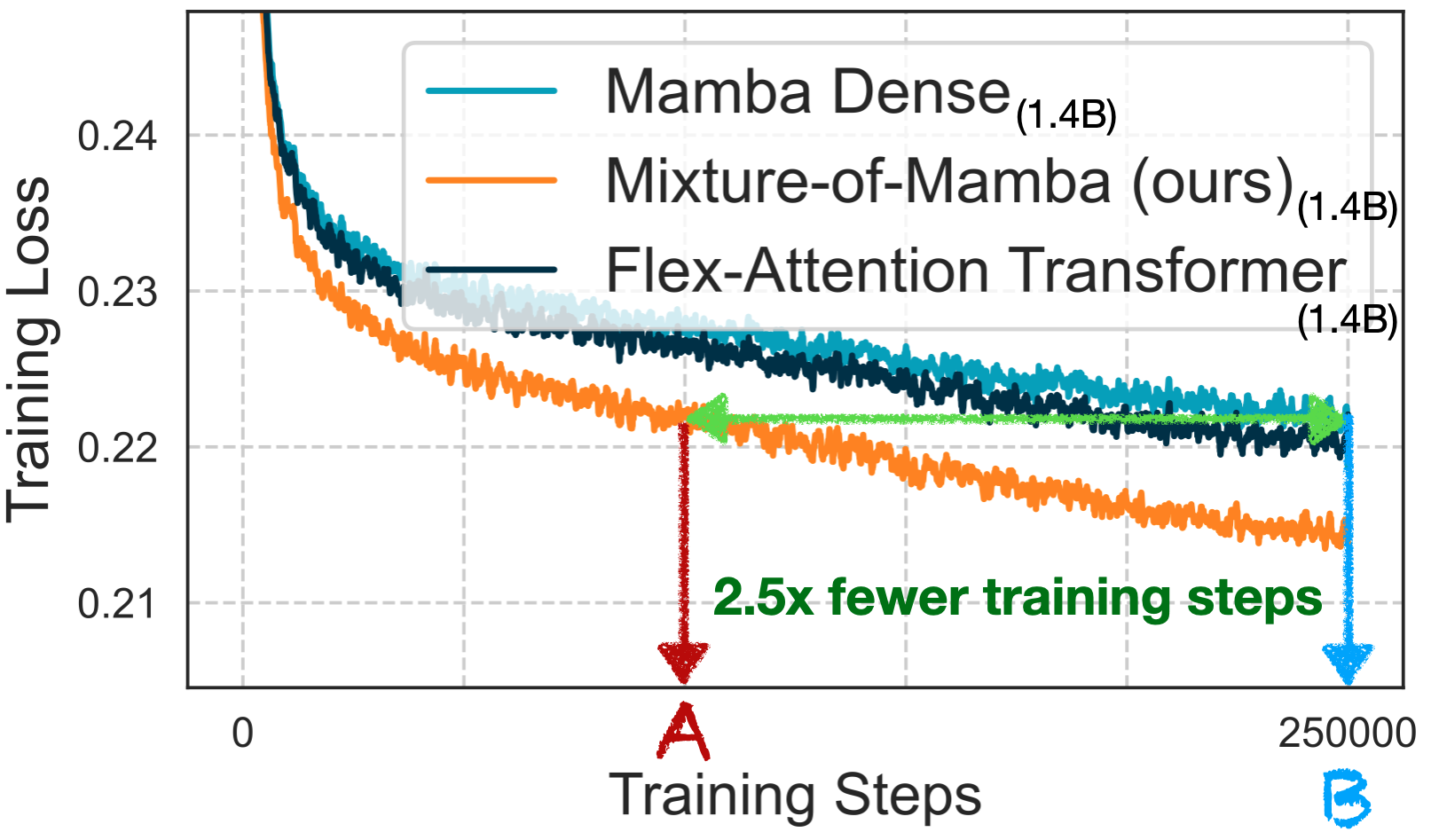

Mixture‑of‑Mamba: Enhancing Multi‑Modal State‑Space Models with Modality‑Aware Sparsity

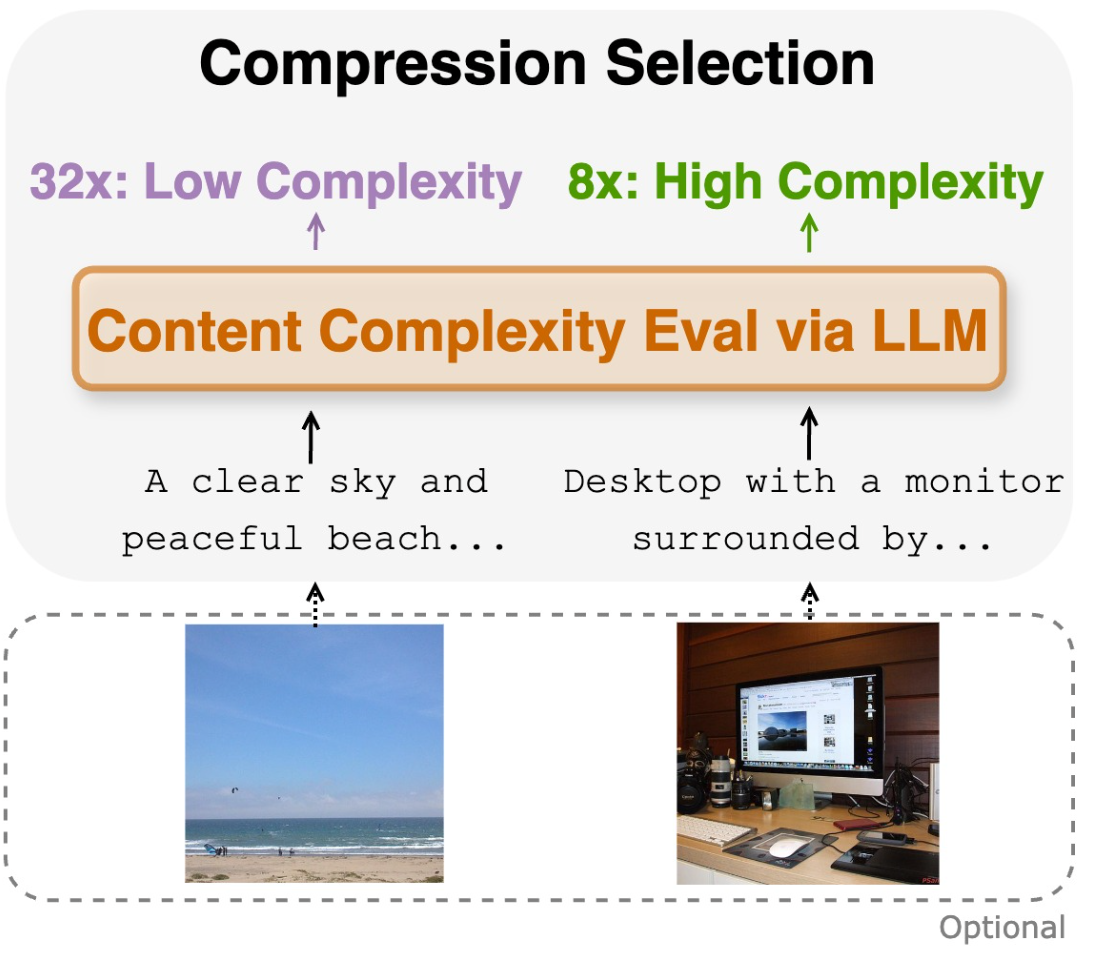

CAT: Content-Adaptive Image Tokenization

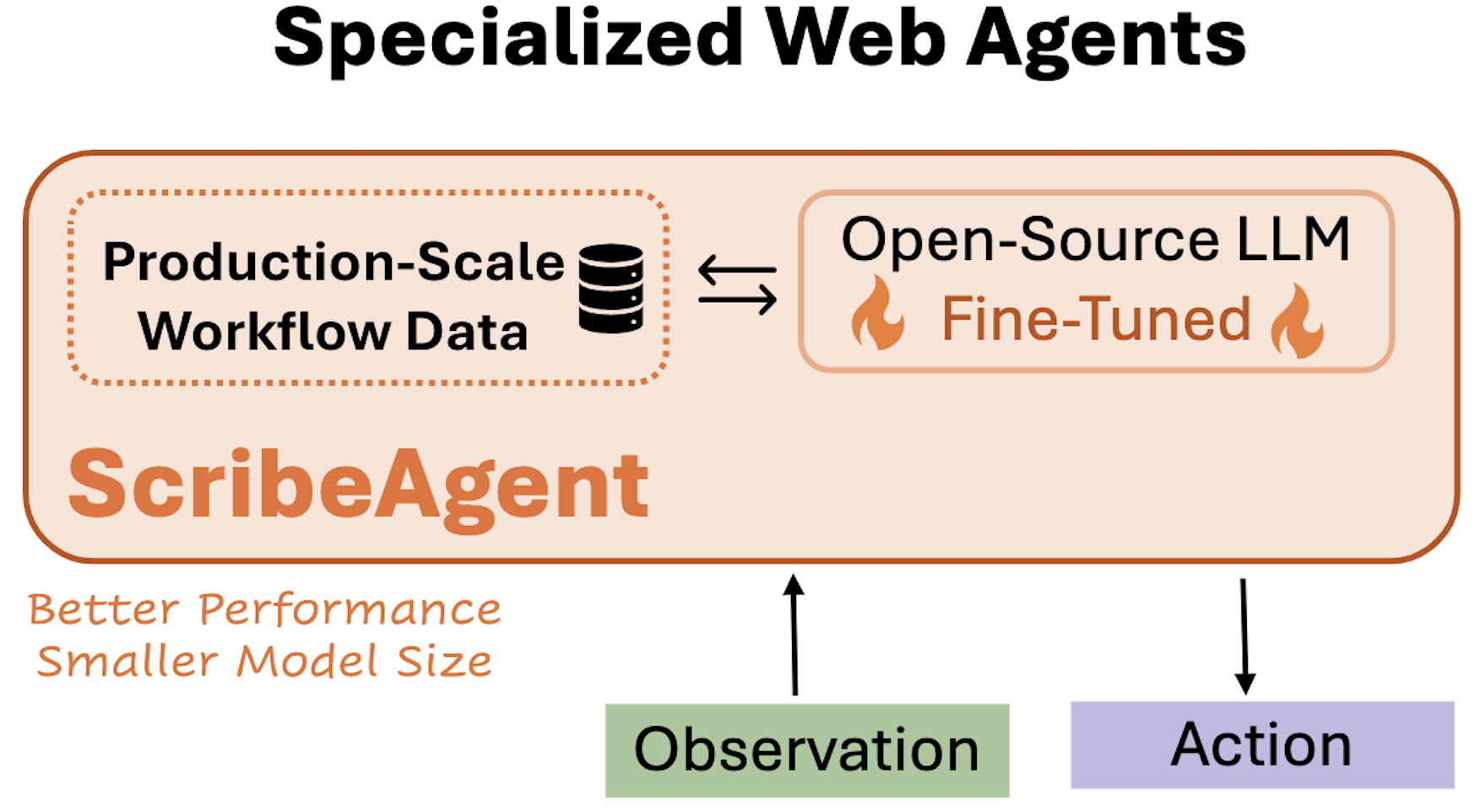

ScribeAgent: Towards Specialized Web Agents Using Production-Scale Workflow Data

Specialized Foundation Models Struggle to Beat Supervised Baselines

UPS: Efficiently Building Foundation Models for PDE Solving via Cross-Modal Adaptation

Tag-LLM: Repurposing General-Purpose LLMs for Specialized Domains

Cross-Modal Fine-Tuning: Align then Refine

Efficient Architecture Search for Diverse Tasks

NAS-Bench-360: Benchmarking Neural Architecture Search on Diverse Tasks

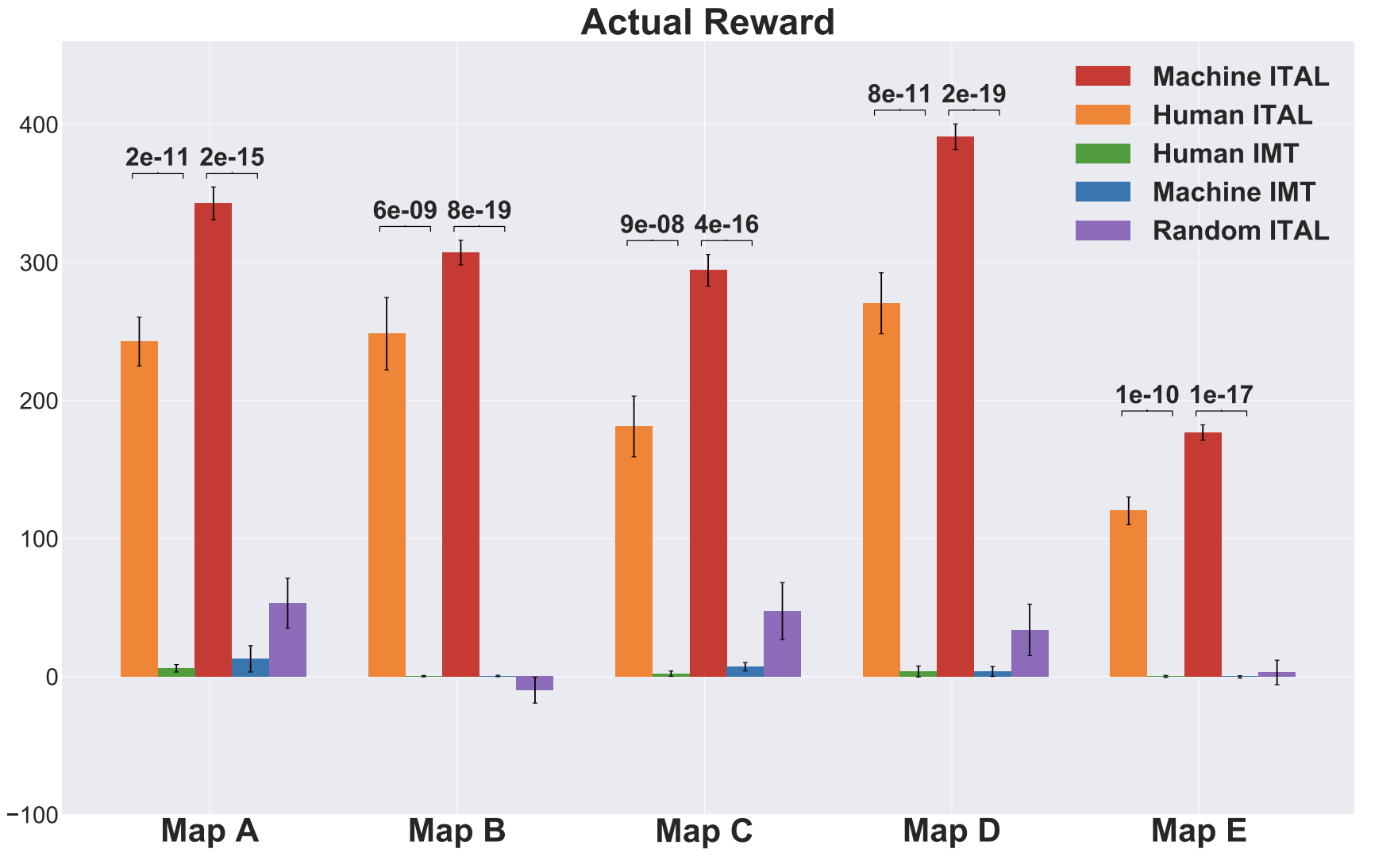

Iterative Teacher-Aware Learning

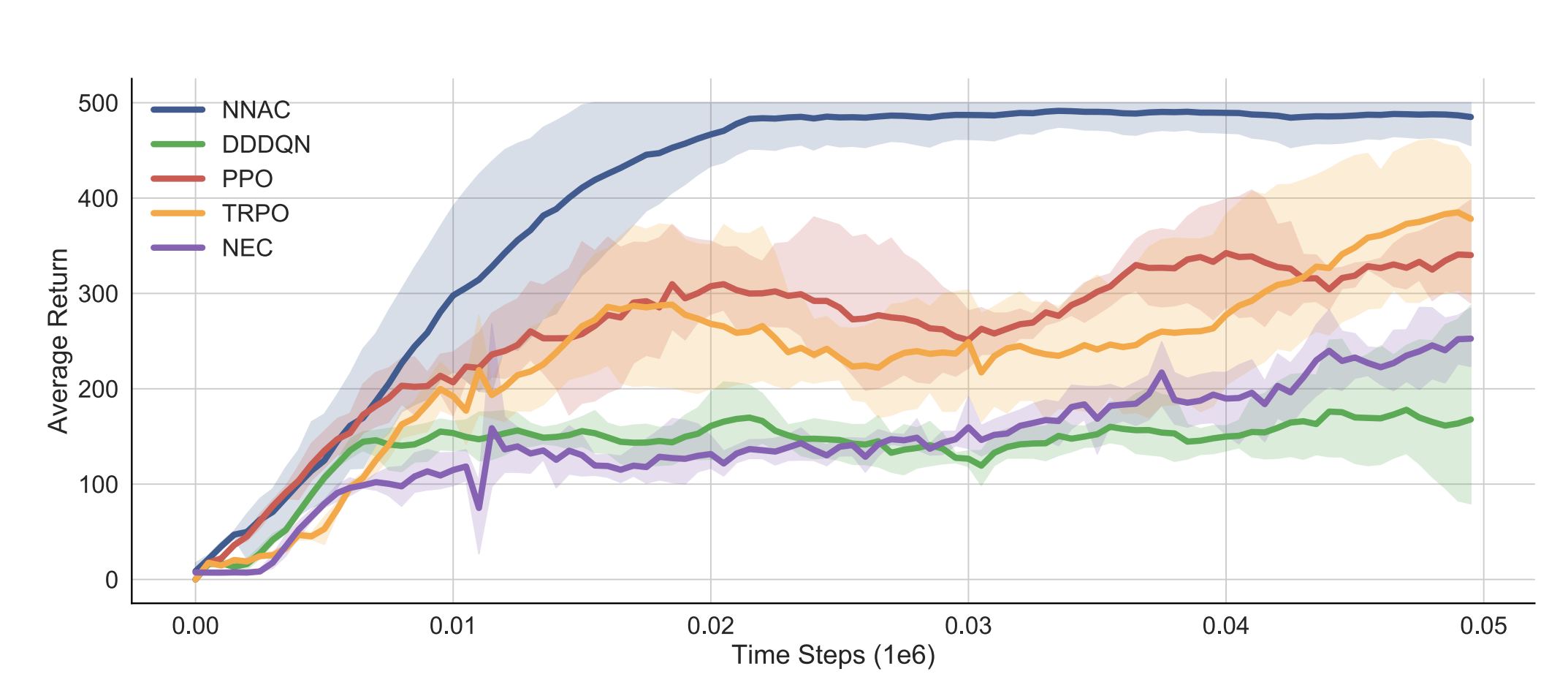

Theoretically Principled Deep RL Acceleration via Nearest Neighbor Function Approximation

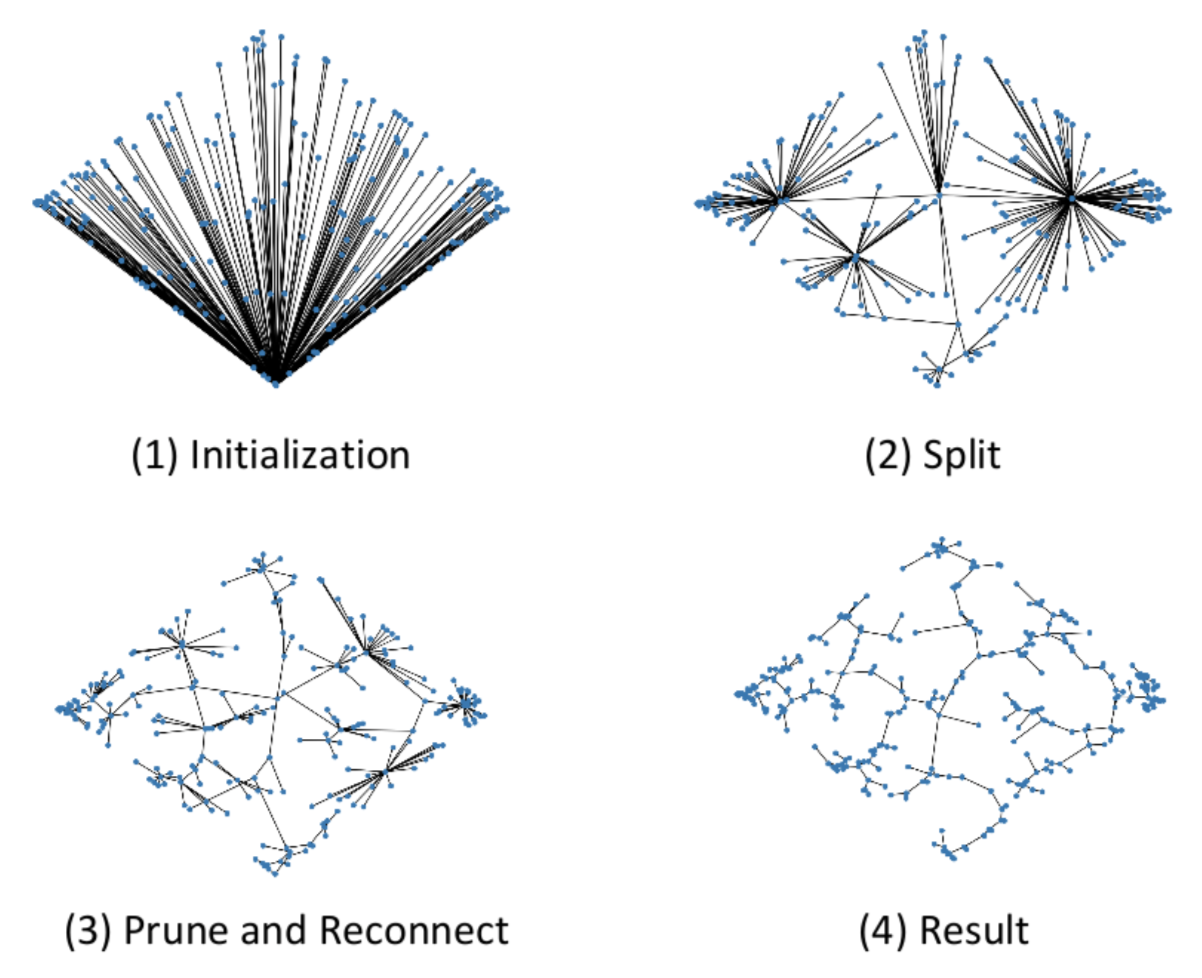

Mathematical Reconstruction of Patient-Specific Vascular Networks Based on Clinical Images and Global Optimization

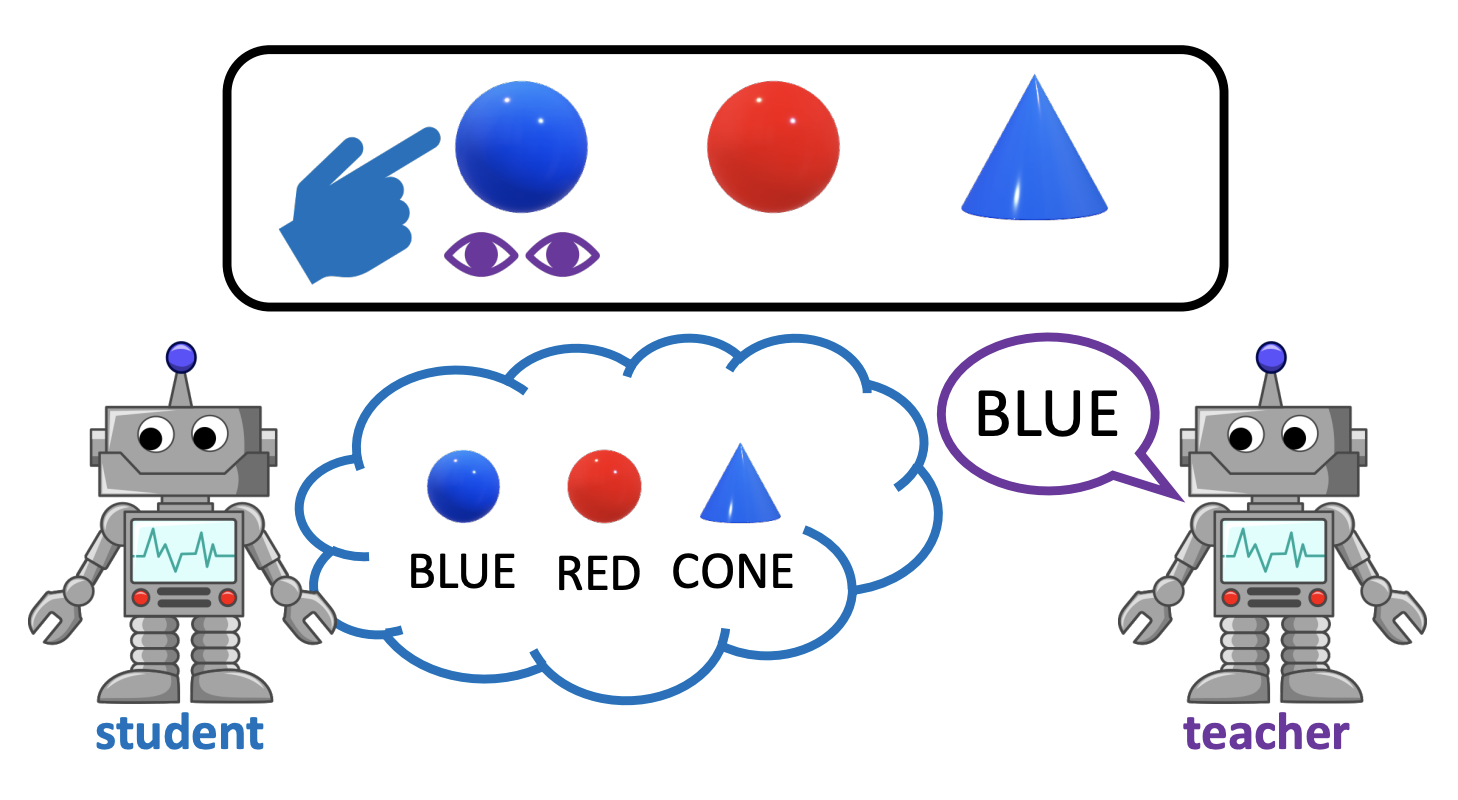

Emergence of Pragmatics from Referential Game between Theory of Mind Agents

Cite Thinking vs. Doing: Agents that Reason by Scaling Test-Time Interaction

@misc{shenbai2025tti,

title={Thinking vs. Doing: Agents that Reason by Scaling Test-Time Interaction},

author={Junhong Shen and Hao Bai and Lunjun Zhang and Yifei Zhou and Amrith Setlur and Shengbang Tong and Diego Caples and Nan Jiang and Tong Zhang and Ameet Talwalkar and Aviral Kumar},

year={2025},

eprint={2506.07976},

archivePrefix={arXiv},

primaryClass={cs.LG},

url={https://arxiv.org/abs/2506.07976},

}

Cite CodePDE: Benchmarking LLMs' Abilities to Solve PDEs through Code Generation

@misc{li2025codepde,

title={CodePDE: An Inference Framework for LLM-driven PDE Solver Generation},

author={Shanda Li and Tanya Marwah and Junhong Shen and Weiwei Sun and Andrej Risteski and Yiming Yang and Ameet Talwalkar},

year={2025},

eprint={2505.08783},

archivePrefix={arXiv},

primaryClass={cs.LG},

url={https://arxiv.org/abs/2505.08783},

}

Cite Mixture‑of‑Mamba: Enhancing Multi‑Modal State‑Space Models with Modality‑Aware Sparsity

@misc{liangshen2025mixtureofmamba,

title={Mixture-of-Mamba: Enhancing Multi-Modal State-Space Models with Modality-Aware Sparsity},

author={Weixin Liang and Junhong Shen and Genghan Zhang and Ning Dong and Luke Zettlemoyer and Lili Yu},

year={2025},

eprint={2501.16295},

archivePrefix={arXiv},

primaryClass={cs.LG},

url={https://arxiv.org/abs/2501.16295},

}

Cite CAT: Content-Adaptive Image Tokenization

@misc{shen2024adaptivetokenizer,

title={CAT: Content-Adaptive Image Tokenization},

author={Junhong Shen and Kushal Tirumala and Michihiro Yasunaga and Ishan Misra and Luke Zettlemoyer and Lili Yu and Chunting Zhou},

year={2025},

eprint={2501.03120},

archivePrefix={arXiv},

primaryClass={cs.CV},

}

Cite ScribeAgent: Towards Specialized Web Agents Using Production-Scale Workflow Data

@misc{shen2024scribeagent,

title={ScribeAgent: Towards Specialized Web Agents Using Production-Scale Workflow Data},

author={Junhong Shen and Atishay Jain and Zedian Xiao and Ishan Amlekar and Mouad Hadji and Aaron Podolny and Ameet Talwalkar},

year={2024},

eprint={2411.15004},

archivePrefix={arXiv},

primaryClass={cs.CL},

}

Cite Specialized Foundation Models Struggle to Beat Supervised Baselines

@misc{xu2024specializedfm,

title={Specialized Foundation Models Struggle to Beat Supervised Baselines},

author={Zongzhe Xu and Ritvik Gupta and Wenduo Cheng and Alexander Shen and Junhong Shen and Ameet Talwalkar and Mikhail Khodak},

year={2024},

eprint={2411.02796},

archivePrefix={arXiv},

primaryClass={cs.LG},

}

Cite UPS: Efficiently Building Foundation Models for PDE Solving via Cross-Modal Adaptation

@misc{shen2024ups,

title={UPS: Efficiently Building Foundation Models for PDE Solving via Cross-Modal Adaptation},

author={Junhong Shen and Tanya Marwah and Ameet Talwalkar},

year={2024},

eprint={2403.07187},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

Cite Tag-LLM: Repurposing General-Purpose LLMs for Specialized Domains

@misc{shen2024tagllm,

title={Tag-LLM: Repurposing General-Purpose LLMs for Specialized Domains},

author={Junhong Shen and Neil Tenenholtz and James Brian Hall and David Alvarez-Melis and Nicolo Fusi},

year={2024},

eprint={2402.05140},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

Cite Cross-Modal Fine-Tuning: Align then Refine

@misc{shen2023orca,

author = {Shen, Junhong and Li, Liam and Dery, Lucio M. and Staten, Corey and Khodak, Mikhail and Neubig, Graham and Talwalkar, Ameet},

title = {Cross-Modal Fine-Tuning: Align then Refine},

publisher = {ICML},

year = {2023},

url = {https://arxiv.org/abs/2302.05738}

}

Cite Efficient Architecture Search for Diverse Tasks

@inproceedings{shen2022efficient,

title={Efficient Architecture Search for Diverse Tasks},

author={Shen, Junhong and Khodak, Mikhail and Talwalkar, Ameet},

booktitle={Advances in Neural Information Processing Systems (NeurIPS)},

year={2022}

}

Cite NAS-Bench-360: Benchmarking Neural Architecture Search on Diverse Tasks

@inproceedings{nasbench360,

title={NAS-Bench-360: Benchmarking Neural Architecture Search on Diverse Tasks},

author={Renbo Tu and Nicholas Roberts and Mikhail Khodak and Junhong Shen and Frederic Sala and Ameet Talwalkar},

booktitle={Advances in Neural Information Processing Systems (NeurIPS) Datasets and Benchmarks Track},

year={2022}

}

Cite Iterative Teacher-Aware Learning

@inproceedings{yuan2021iterative,

title={Iterative Teacher-Aware Learning},

author={Luyao Yuan and Dongruo Zhou and Junhong Shen and Jingdong Gao and Jeffrey L. Chen and Quanquan Gu and Ying Nian Wu and Song-Chun Zhu},

booktitle={Advances in Neural Information Processing Systems (NeurIPS)},

year={2021}

}

Cite Theoretically Principled Deep RL Acceleration via Nearest Neighbor Function Approximation

@inproceedings{Shen2021TheoreticallyPD,

title={Theoretically Principled Deep RL Acceleration via Nearest Neighbor Function Approximation},

author={Junhong Shen and Lin F. Yang},

booktitle={AAAI},

year={2021}

}

Cite Mathematical Reconstruction of Patient-Specific Vascular Networks Based on Clinical Images and Global Optimization

@article{shen2021reconstruction,

author={Shen, Junhong and Faruqi, Abdul Hannan and Jiang, Yifan and Maftoon, Nima},

journal={IEEE Access},

title={Mathematical Reconstruction of Patient-Specific Vascular Networks Based on Clinical Images and Global Optimization},

year={2021},

volume={9},

pages={20648-20661}

}

Cite Emergence of Pragmatics from Referential Game between Theory of Mind Agents

@article{Yuan2020EmergenceOP,

title={Emergence of Pragmatics from Referential Game between Theory of Mind Agents},

author={Luyao Yuan and Zipeng Fu and Jingyue Shen and Lu Xu and Junhong Shen and Song-Chun Zhu},

journal={NeurIPS 2019 Workshop on Emergent Communication},

year={2019}

}